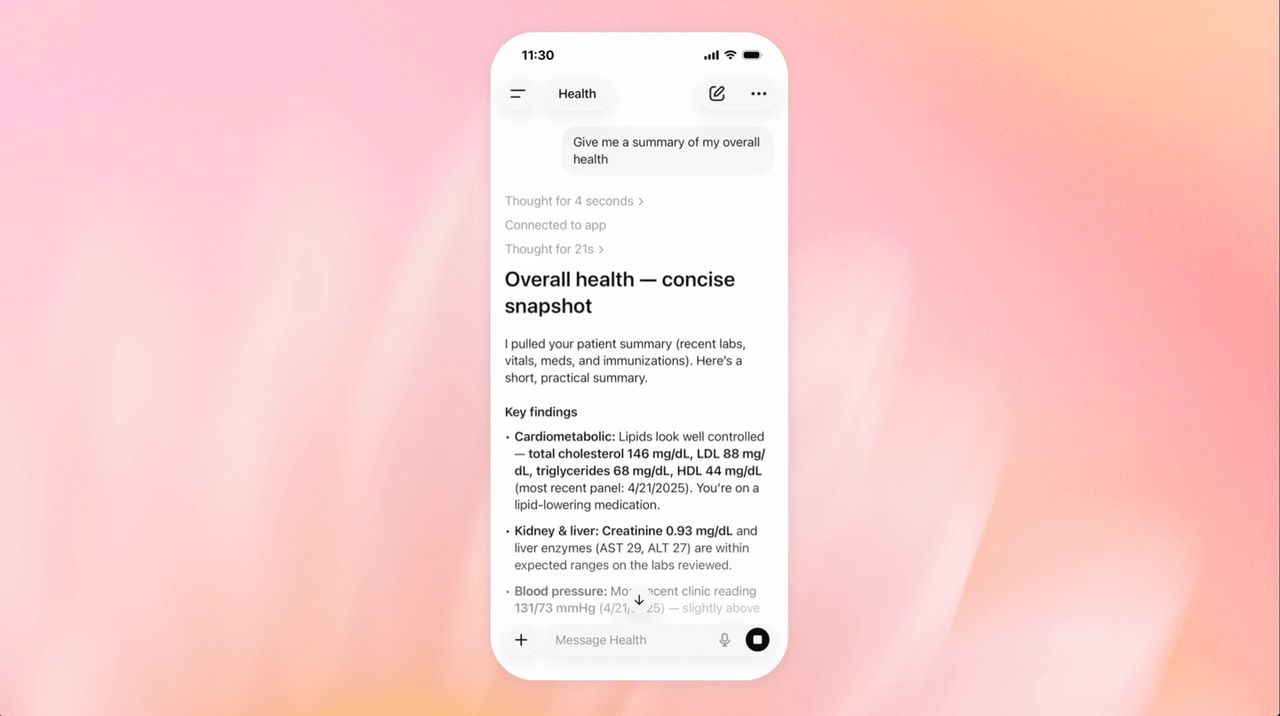

With 230 million people asking health-related questions each week, OpenAI has created a dedicated, sandboxed space to keep that data secure. It aims to help users analyze lab results and prepare for medical appointments, and the data will not be used to train AI models.

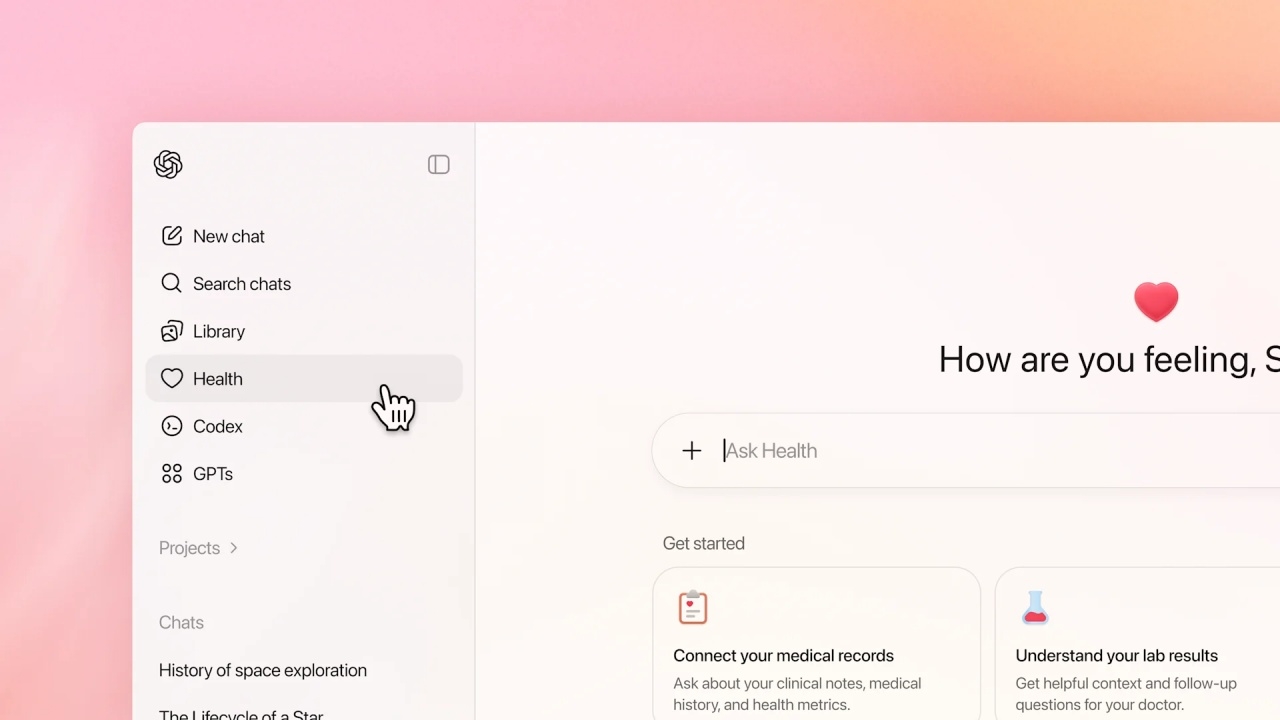

OpenAI has officially launched ChatGPT Health, a dedicated space for health and wellness conversations. The company built it after finding that more than 230 million users worldwide consult ChatGPT for medical information every week.

ChatGPT Health keeps health-related conversations strictly separate from regular chats, a form of sandboxing that prevents sensitive health context from leaking into general discussions.

However, ChatGPT Health can still reference information from regular chats. For example, if you previously asked ChatGPT in a regular chat to help plan your marathon training, ChatGPT Health will recognize that you are a runner and can give more precise advice on your fitness goals.

ChatGPT Health can connect to health apps such as Apple Health, Function, and MyFitnessPal, and can retrieve U.S. medical records through partners such as b.well. OpenAI states that data and conversations in ChatGPT Health are protected by dedicated encryption and will not be used to train its AI models.

OpenAI emphasized that ChatGPT Health was created to help close gaps in public healthcare, such as high costs, difficulty getting access to physicians, and a lack of continuous care. The company also reiterated that ChatGPT Health is not intended to diagnose or treat illness, citing the risk of AI hallucinations that can produce inaccurate information: the system is designed to predict the most likely answer, which may not always agree with sound medical principles.

Source: ChatGPT