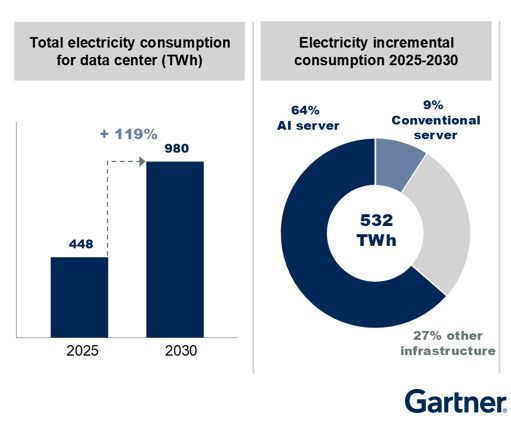

This year, the rapid growth of AI is testing not only technological capabilities but also the global energy system. The latest data from Gartner, a business and technology insights firm, shows that global electricity demand from data centers will increase by 16% in 2025 and more than double within the next five years, rising from 448 terawatt-hours (TWh) this year to 980 TWh by 2030. This reflects AI becoming a major global energy consumer and, if energy infrastructure cannot keep pace, potentially a new bottleneck for the digital economy.
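To put the five-year forecast in annual terms, the short Python sketch below derives the average yearly growth rate implied by the two figures quoted above. The function name is illustrative, and steady compound growth is an assumption made here, not something the report states.

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh in `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1


# Figures quoted in the article: 448 TWh in 2025, 980 TWh in 2030.
growth = implied_annual_growth(448, 980, 5)
print(f"Implied average annual growth: {growth:.1%}")
```

Under that assumption the result is roughly 17% per year, in line with the 16% increase cited for 2025.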

Fundamentally, a data center is a digital factory that needs electricity around the clock to power servers, storage systems, networking equipment, and cooling systems. Energy consumption is typically measured in kilowatt-hours (kWh) over a month or a year, while the power a facility draws at any given moment is expressed in kilowatts (kW) or megawatts (MW).

In terms of scale, small data centers may draw only tens of kilowatts (kW), but large or hyperscale data centers can draw 10 megawatts (MW) or more, comparable to the electricity usage of a small city. This explains why expanding data centers to support AI is no longer just a matter of real estate or IT systems but a challenge for the electrical grid and for energy security.
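Power (kW, MW) is a rate, while energy (kWh, MWh) is power sustained over time. As a rough sketch of that conversion, the Python snippet below estimates the annual energy use of a facility, assuming (for illustration only) that it draws a constant load all year.

```python
HOURS_PER_YEAR = 24 * 365  # non-leap year


def annual_energy_mwh(average_load_mw: float) -> float:
    """Energy in MWh used in one year at a constant average load in MW."""
    return average_load_mw * HOURS_PER_YEAR


# A 10 MW facility running around the clock.
energy_mwh = annual_energy_mwh(10)
print(f"{energy_mwh:,.0f} MWh per year ({energy_mwh / 1000:.1f} GWh)")
```

A constant 10 MW load works out to 87,600 MWh, or 87.6 GWh, per year.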

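In the data center world, the focus is not only on total electricity use but also on efficiency, measured by Power Usage Effectiveness (PUE): the ratio of a facility's total energy use to the energy consumed by its IT equipment. The closer the value is to 1.0, the less energy goes to overhead such as cooling.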
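As a minimal sketch of how PUE is calculated, the Python function below divides total facility energy by the energy used by IT equipment. The input figures are made-up illustrative values, not data from the report.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical facility: 1,500 kWh in total, of which 1,000 kWh reaches IT equipment.
example = pue(1500, 1000)
print(f"PUE = {example:.2f}")
```

Here the result is 1.50, meaning every kilowatt-hour of computing requires another half kilowatt-hour for cooling and other overhead.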

In the AI era, servers are packed more densely and generate more heat than conventional ones, making PUE a key competitive metric, comparable to labor costs or energy prices. What differentiates AI data centers from typical cloud data centers is the continuous energy demand driven by specialized hardware such as GPUs and AI-optimized servers.

Gartner's data indicates that AI-optimized servers will account for nearly two-thirds of the increase in data center electricity consumption by 2030. Electricity use by these servers is projected to grow nearly fivefold, from 93 terawatt-hours (TWh) in 2025 to 432 TWh in 2030. This means that even with improved data center efficiency, AI's "appetite" will still push total energy demand higher.

This year, AI-optimized servers account for about 21% of total data center electricity use, but by 2030 this figure will rise to 44%, and these servers will drive 64% of the overall increase in energy consumption. In other words, AI data centers are building infrastructure that consumes vastly more electricity than that of the internet era.
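These percentages follow directly from the terawatt-hour figures quoted in the article. The Python sketch below reproduces them; the variable names are illustrative.

```python
# Figures quoted in the article, in terawatt-hours (TWh).
total_2025, total_2030 = 448, 980  # all data centers
ai_2025, ai_2030 = 93, 432         # AI-optimized servers only

share_2025 = ai_2025 / total_2025
share_2030 = ai_2030 / total_2030
# Portion of the total increase that comes from AI-optimized servers.
share_of_increase = (ai_2030 - ai_2025) / (total_2030 - total_2025)

print(f"AI share in 2025:      {share_2025:.0%}")
print(f"AI share in 2030:      {share_2030:.0%}")
print(f"AI share of increase:  {share_of_increase:.0%}")
```

The results round to 21%, 44%, and 64%, matching the figures above.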

The report also states that over two-thirds of global data center energy demand is concentrated in the United States and China. U.S. data centers' share of regional electricity consumption is expected to rise from 4% to 7.8% by 2030, while Europe's share will grow from 2.7% to 5%. Interestingly, China holds a stronger position even though its growth is less dramatic than that of the U.S., for several key reasons.

A major looming problem is that although AI is viewed as a future technology, its energy supply still heavily depends on fossil fuels. Gartner regards this as unsustainable, prompting the integration of new energy sources like hydrogen power, geothermal energy, and small modular nuclear reactors (SMRs) into the equation.

These technologies are expected to begin deployment at the "microgrid" level within data centers by the end of the decade. In the short term, natural gas remains the primary energy source, but within 3–5 years, Battery Energy Storage Systems (BESS) will play a key role in managing the volatility of renewable energy.

This report signals clearly that the AI race going forward is not only about models but also about "power capacity." Countries and organizations with ample, affordable, and stable electricity will hold the advantage, with China increasingly emerging as a competitor to the U.S.

China's advantage lies not just in the number of data centers but in lower energy costs per unit of computation, enabled by energy-efficient servers, system designs that handle high loads without raising PUE, and integrated energy infrastructure planned alongside data centers from the start. While many countries scramble to resolve electricity shortages following AI growth, China has already approached this as a national strategic priority.

As of 2025, understanding data center energy consumption is no longer a technical niche. In a world where AI becomes foundational infrastructure, economic costs and energy security must be considered together to reflect national-level impacts and the broader picture.
